The National Society for the Prevention of Cruelty to Children (NSPCC) has called out Facebook for its "significant failure in corporate responsibility." NSPCC is a children's charity organization based in the United Kingdom.

Facebook, which also owns Instagram, was thrown under the bus after a significant drop in its removals of suicide and self-harm posts.

As reported by the BBC, Facebook removed more than 1.3 million suicide and self-harm posts during the first quarter of 2020. In the second quarter, that number fell to "only" 277,400, a worrying decline.

"Sadly, young people who needed protection from damaging content were let down by Instagram's steep reduction in takedowns of harmful suicide and self-harm posts," Andy Burrows, the charity's head of child safety online policy, told the BBC.

The situation was not easy for anyone involved, given that most Facebook employees had been working from home since the start of the pandemic. Thankfully, the company's moderation work is slowly returning to its pre-pandemic level.

Facebook's Moderators Lawsuit

Facebook has said that its employees would work from home until 2021.

However, a group of 200 moderators recently signed an open letter to Facebook's bosses, accusing the company of "needlessly risking" their lives by requiring them to return to the office despite the health risks posed by COVID-19.

The 200 signatories say that the firm not only has a somewhat toxic working culture but has also raised their targets during the pandemic while offering almost no support.

Facebook denied the accusations and said it had discussed the matter privately and internally.

The open letter is not the first complaint Facebook's moderators have made against their employer.

Earlier this year, Facebook agreed to pay $52 million to settle a lawsuit brought by moderators who developed PTSD on the job. Moderators who had worked in California, Arizona, Texas, and Florida since 2015 were eligible for $1,000 payouts. In some severe cases, the amount could rise to $6,000.

A Wake-Up Call

In 2017, 14-year-old British student Molly Russell took her own life. Her death was a wake-up call for tech giants to take more responsibility for what happens on their platforms.

Before her death, Russell had viewed a series of graphic and disturbing posts on Instagram about suicide and self-harm.

Her father, Ian, criticized Instagram for failing to protect its users. While he believes that no social media company ever intended its platform to be used in this way, he says harmful content will always be there, and it is up to the developers to tackle it.

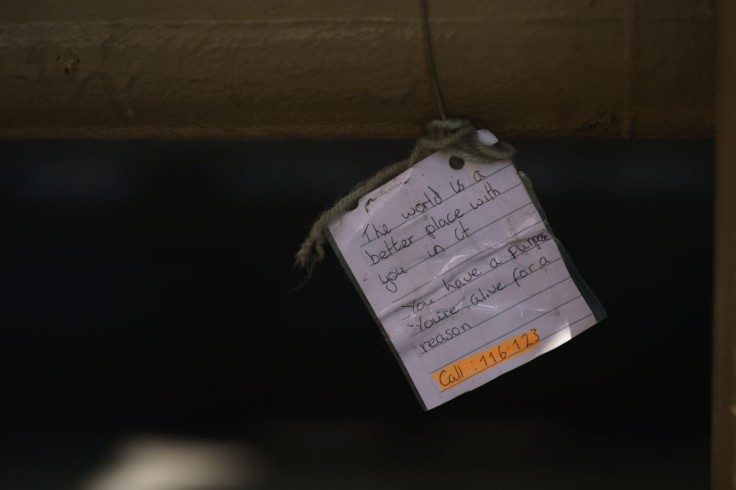

Earlier this year, several tech giants, including Facebook and Google, agreed to new guidelines from the charity Samaritans on dealing with harmful online content.