TikTok now has a new system for punishing policy offenders.

The popular social media platform recently revealed a new "strike" system that penalizes users who violate its community guidelines.

TikTok's new policy is now live, issuing strikes to accounts that violate the platform's community guidelines.

TikTok Strike System Details

TikTok Global Head of Product Policy Julie de Bailliencourt said in a blog post that the platform has a new strike system meant to discourage users from violating its community guidelines and to punish those who do.

According to the company's findings, its previous account enforcement system used different types of restrictions to prevent abuse of its product features while teaching people about its policies to reduce further violations. These restrictions include temporary bans from posting or commenting.

However, while this system reduced harmful content overall, many creators found it confusing to navigate. Some also found that it could unfairly impact creators who rarely and unknowingly violate a policy, while potentially being less efficient at deterring people who repeatedly violate the platform's Community Guidelines.

Under TikTok's new strike system, an account acquires a strike each time it violates a community guideline, and the content that triggered the strike is removed. Accumulating enough strikes to reach the threshold within a product feature or policy, such as bullying and harassment, results in a permanent ban.

These strikes will expire from an account's record after 90 days, or about three months. Interestingly, de Bailliencourt did not disclose the thresholds for each kind of violation.

Not all violations carry the same strike impact. For example, a strike for promoting hateful ideologies has more of an impact than one for sharing low-harm spam. Meanwhile, severe violations like promoting or threatening violence, showing or aiding in the creation of child sexual abuse material, or showing real-world violence or torture result in an immediate permanent ban.
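
For readers who want to see how such a scheme could fit together, here is a minimal Python sketch of the rules described above. It is only an illustration, not TikTok's implementation: the 90-day expiry and the immediate ban for severe violations come from the announcement, but the weights, the threshold, the violation names, and the `Account` class are all hypothetical, since TikTok did not disclose its actual values. The sketch also simplifies by using a single threshold rather than one per feature or policy.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# From the announcement: strikes expire after 90 days.
STRIKE_LIFETIME = timedelta(days=90)

# Hypothetical values: TikTok has not disclosed real weights or thresholds.
HYPOTHETICAL_WEIGHTS = {"low_harm_spam": 1, "hateful_ideology": 3}
HYPOTHETICAL_THRESHOLD = 5

# Hypothetical labels for the severe violations that lead to an immediate ban.
SEVERE_VIOLATIONS = {"threatening_violence", "csam", "real_world_violence"}


@dataclass
class Account:
    strikes: list = field(default_factory=list)  # (timestamp, weight) pairs
    banned: bool = False

    def active_weight(self, now: datetime) -> int:
        """Sum the weights of strikes that have not yet expired."""
        return sum(w for t, w in self.strikes if now - t < STRIKE_LIFETIME)

    def record_violation(self, kind: str, now: datetime) -> bool:
        """Record a violation and return whether the account is now banned."""
        if kind in SEVERE_VIOLATIONS:
            self.banned = True  # severe violations skip the strike count
            return self.banned
        self.strikes.append((now, HYPOTHETICAL_WEIGHTS[kind]))
        if self.active_weight(now) >= HYPOTHETICAL_THRESHOLD:
            self.banned = True
        return self.banned
```

Under these assumed numbers, an account that picks up one spam strike and one hateful-ideology strike on the same day stays below the threshold, and both strikes stop counting once 90 days have passed.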

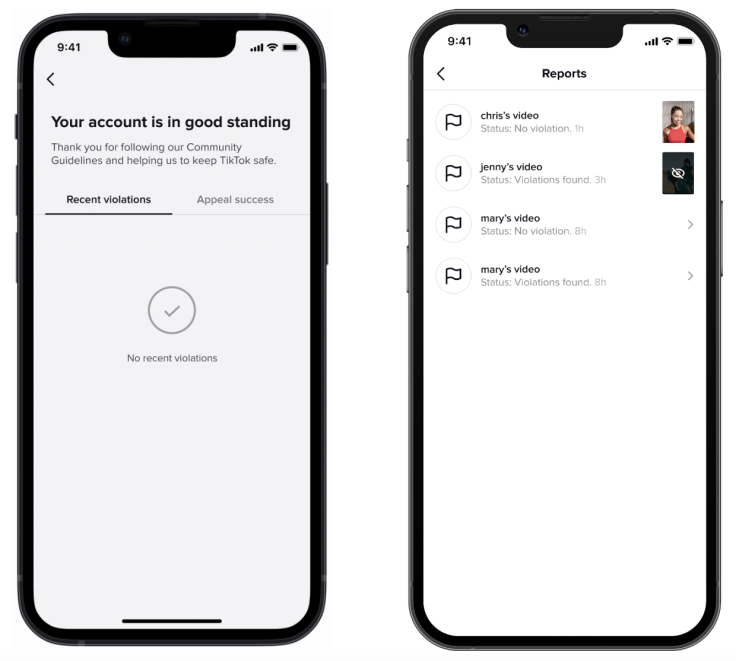

As part of its efforts to be transparent with users, TikTok is adding a feature that lets users see what kinds of violations they've received through an upcoming Safety Center tab with an "Account status" page viewable in the app, per Gizmodo.

The Efforts To Moderate A Social Media Platform

This strike system didn't come to TikTok without cost. According to Engadget and TechCrunch, TikTok received calls for transparency, simplicity, and better methods to handle content moderation and algorithmic recommendations.

To respond to these complaints, TikTok added a new feature that provides creators with information about which of their videos are marked as ineligible for recommendation to people's "For You" feeds and the reason behind the mark.

Creators can appeal to TikTok's content moderation team to remove said mark if they so choose.

Additionally, users and creators can use the recently added "Report records" page where they can see the status of the reports they've made on other content or accounts, allowing them to keep tabs on their reports.
